Networked Cyber Physical Systems at SRI

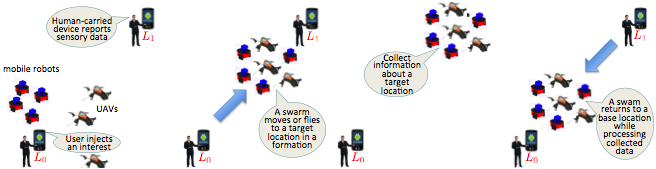

An example of a cyber-physical ensemble is a swarm of programmable ground robots and UAVs that perform a distributed surveillance mission (e.g., to achieve situation awareness during an emergency) by moving or flying to a suspicious location, collecting information, and returning to a base location in a formation that creates an effective sensing grid. This application also involves human-carried computing/communication devices, such as smartphones, that collect and report sensor data and inject users' interests into the system. Heterogeneous nodes have different capabilities: UAVs can fly and generate an encoded video stream with their front- and bottom-facing cameras. Additional computing resources also enable UAVs to extract a snapshot from the encoded video and decode it into JPEG format. Robots can only move on the ground and decode the encoded snapshots from UAVs. The network is adaptive in the sense that it morphs in response to the users' needs.
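To make the division of labor among heterogeneous nodes concrete, here is a minimal sketch of a capability table for the three node types described above, plus a lookup that returns which nodes could perform a given operation. The capability labels, the `Node` class, and the `capable_nodes` helper are hypothetical illustrations, not the API of SRI's framework.

```python
from dataclasses import dataclass, field

# Hypothetical capability labels derived from the prose; not the framework's API.
FLY = "fly"
MOVE_GROUND = "move_ground"
ENCODE_VIDEO = "encode_video"
EXTRACT_SNAPSHOT = "extract_snapshot"
DECODE_SNAPSHOT = "decode_snapshot"
REPORT_SENSORS = "report_sensors"
INJECT_INTEREST = "inject_interest"

# Capabilities per node type, as described for UAVs, ground robots, and phones.
NODE_TYPE_CAPS = {
    "uav": {FLY, ENCODE_VIDEO, EXTRACT_SNAPSHOT, DECODE_SNAPSHOT},
    "robot": {MOVE_GROUND, DECODE_SNAPSHOT},
    "phone": {REPORT_SENSORS, INJECT_INTEREST},
}


@dataclass
class Node:
    name: str
    kind: str  # one of the keys of NODE_TYPE_CAPS
    capabilities: set = field(init=False)

    def __post_init__(self):
        # Copy the set so per-node changes do not alter the shared table.
        self.capabilities = set(NODE_TYPE_CAPS[self.kind])


def capable_nodes(nodes, capability):
    """Return the names of nodes that can perform `capability`, in list order."""
    return [n.name for n in nodes if capability in n.capabilities]


swarm = [Node("uav1", "uav"), Node("robot1", "robot"), Node("phone1", "phone")]
print(capable_nodes(swarm, DECODE_SNAPSHOT))  # ['uav1', 'robot1']
```

A plain set per node keeps the sketch simple; a real system would likely also track dynamic resources (battery, CPU load) alongside these static capabilities.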

Fig. 1. A user's interest is injected into the network at a location L0, around which the robots/UAVs are initially clustered randomly. A noise recorded at a location L1 triggers the distributed mission.

Figure 1 illustrates a scenario in which a hybrid swarm moves/flies to a location to investigate a noise reported by an Android phone. The swarm takes a snapshot of the area and returns to the base location. In this scenario, perturbations from the environment (e.g., wind), delayed or incomplete knowledge due to network disruption, and resource contention cause uncertainty. Real-world actions must compensate for temporary network disconnections or failures. These actions can only indirectly control parameters, such as a robot's position, that are observed through sensors (e.g., GPS). Therefore, a swarm should dynamically configure itself, optimize its resources, and provide robust information dissemination services that adapt to the users' interest in a specific topic.
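One simple way to tolerate delayed or incomplete knowledge caused by network disruption is to let each node keep a local knowledge base and merge it with any neighbor it can currently reach. The sketch below assumes a last-writer-wins rule keyed by fact name with a logical timestamp; the `Fact` and `KnowledgeBase` classes, the key naming scheme, and the merge rule are illustrative assumptions, not necessarily how SRI's framework reconciles knowledge.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Fact:
    key: str        # e.g. "snapshot@L1" (hypothetical naming scheme)
    value: object
    timestamp: int  # logical time of the observation (assumed)


@dataclass
class KnowledgeBase:
    facts: dict = field(default_factory=dict)

    def add(self, fact):
        """Store a fact only if it is newer than what this node already knows.

        Ties keep the existing value; a real system would need a
        deterministic tie-breaker so that all nodes converge.
        """
        current = self.facts.get(fact.key)
        if current is None or fact.timestamp > current.timestamp:
            self.facts[fact.key] = fact

    def merge_from(self, other):
        """Absorb every fact held by a neighbor that is currently reachable."""
        for fact in other.facts.values():
            self.add(fact)


def exchange(a, b):
    # Symmetric sync, run opportunistically whenever two nodes are connected.
    a.merge_from(b)
    b.merge_from(a)


uav, robot = KnowledgeBase(), KnowledgeBase()
uav.add(Fact("snapshot@L1", "frame-17", timestamp=5))
robot.add(Fact("position:robot1", (3.0, 4.0), timestamp=2))
exchange(uav, robot)
print(sorted(uav.facts))  # ['position:robot1', 'snapshot@L1']
```

Because `add` ignores stale updates, replaying the same exchange after a reconnection is harmless, which suits links that come and go.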

This interest can induce cyber-physical control (e.g., robot positioning, UAV trajectory/formation modifications) and trigger additional sensing (e.g., a snapshot of an area). Goals (e.g., interests and controls) and facts (e.g., sensor readings and computational results) can arrive from the users and the environment at any time, and are opportunistically shared whenever connectivity exists. Programmable robots and UAVs compute their local solutions based on local knowledge and exchange up-to-date information about the progress of their solutions. These abilities enable a distributed and cooperative execution approach without the need for global coordination. In the sample mission, the snapshot extracted from the encoded video stream (abstraction) may be sent directly to other nodes if the network supports it. If not, the decoded stream (computation) is sent to other nodes in the form of knowledge (communication). Each node can potentially engage in many abstractions, computations, and communications, which are classes of operations regarded as the three dimensions of a distributed computing cube. The mission is accomplished when the distributed proof is completed. The solution assigns an approximate target region to the swarm as a subgoal (macro-scale controls). The swarm moves or flies to realize the subgoal locally (micro-scale controls). The distributed reasoning continuously recomputes the local solutions and adjusts the macro-scale controls accordingly. To realize the subgoal through micro-scale controls, quantitative techniques such as virtual potential fields or artificial physics compute the
movements of individual UAVs/robots. For details, please refer to [1].

1. J-S. Choi, T. McCarthy, M. Kim, M-O. Stehr. Adaptive Wireless Networks as an Example of Declarative Fractionated Systems. 10th Int. Conf. Mobile and Ubiquitous Systems: Computing, Networking and Services (MOBIQUITOUS'13), Dec. 2013, Tokyo, Japan.

The underlying cyber-framework was
extended to support various abstractions and APIs for programming a

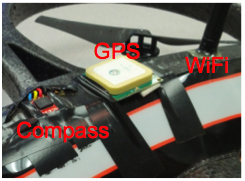

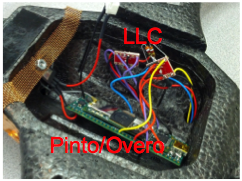

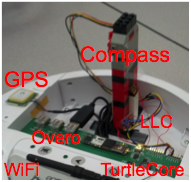

heterogeneous testbed based on the Parrot AR.Drone 2.0, iRobot Create platforms, and various Android devices. Heterogeneous

Testbed: The AR.Drones

and Creates were equipped with additional computing resources (Gumstix modules) and sensors (GPS and digital compass)

shown below to increase their autonomy and improve capabilities such as

localization in physical space. The Gumstix

computer is also equipped with a WiFi antenna which provides communication via a peer-to-peer ad-hoc

network. The testbed uses the Google Nexus S

running CyanogenMod 7.2 with

built-in digital compass, GPS, and camera as a standard Android device. As

for the software side, the AR.Drones (firmware ver.

2.3.3) are controlled via WiFi, using a modified

version of JavaDrone (ver. 1.3) [14] for sending

commands and interpreting information from the drone's onboard sensors and

video cameras. JavaDrone was modified to support

video reception from the AR.Drone 2.0 by extracting video frames

from the stream and passing them directly to the framework rather than

attempting to decode them. This encoded frame data can be decoded later when

it is deemed necessary, possibly on another device with more available

computing resources. The Creates are controlled via a direct serial port

connection from the Gumstix using the cyber-API which supports sending movement and audio commands.
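Since encoded frames are passed through undecoded and may be decoded later on a device with more available computing resources, some node has to decide where decoding happens. The following is a minimal sketch of one possible placement rule: keep the frame local unless a reachable, decoding-capable peer has more idle CPU and the link can ship the encoded frame quickly enough. The `Peer` fields, the idle-CPU metric, and the `max_transfer_s` threshold are assumptions for illustration, not the testbed's actual policy.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Peer:
    name: str
    can_decode: bool
    free_cpu: float             # fraction of CPU currently idle, 0.0 to 1.0 (assumed metric)
    link_kbps: Optional[float]  # current link bandwidth to this peer; None if unreachable


def choose_decoder(local, peers, frame_kb, max_transfer_s=2.0):
    """Pick the node that should decode an encoded frame of size frame_kb kilobytes.

    Returns None when no node can decode right now, meaning the encoded
    frame is kept and the decision is deferred.
    """
    best = local if local.can_decode else None
    for p in peers:
        if not p.can_decode or p.link_kbps is None:
            continue
        transfer_s = frame_kb * 8 / p.link_kbps  # kilobytes -> kilobits
        if transfer_s > max_transfer_s:
            continue  # shipping the frame would take too long over this link
        if best is None or p.free_cpu > best.free_cpu:
            best = p
    return best


local = Peer("uav1-gumstix", can_decode=True, free_cpu=0.1, link_kbps=None)
peers = [
    Peer("robot1", can_decode=True, free_cpu=0.6, link_kbps=1000.0),
    Peer("robot2", can_decode=True, free_cpu=0.9, link_kbps=100.0),  # too slow for 50 KB
]
print(choose_decoder(local, peers, frame_kb=50).name)  # robot1
```

Returning `None` instead of forcing a choice mirrors the "decode later when needed" idea: the encoded data stays in the knowledge base until a suitable decoder becomes reachable.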

We tested an autonomous outdoor distributed surveillance mission in which a swarm of 3 AR.Drones, 4 iRobot Creates, and 2 Android phones collects information about a target location in a 70 m × 35 m parking lot. The mission is fully distributed and demonstrates the core ideas of autonomous control of fractionated systems. Localization is done without infrastructure support, such as a base station serving as a command/control post or external camera-based localization. First, a user's interest is injected as a goal from one Android phone. The app on the other phone reports sensor data that triggers the mission. The drones and robots then move toward the target location, take a picture, and return to the position where the user is located.
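The movement phases of this mission (toward the target, then back to the user) are where the virtual potential fields or artificial physics mentioned earlier would compute individual movements. Below is a minimal sketch of one artificial-potential-field step, assuming planar coordinates in meters, a linear attraction toward the goal, and Khatib-style repulsion from neighbors closer than a safety radius. The gains, radius, and step cap are made-up values, and treating the force directly as a displacement is a simplification, not the controller used in the experiment.

```python
import math


def potential_field_step(pos, goal, neighbors,
                         k_att=1.0, k_rep=2.0, safe_radius=3.0, max_step=1.0):
    """Return the next (x, y) position after one potential-field step.

    Attraction pulls linearly toward `goal`; each neighbor closer than
    `safe_radius` pushes the node away. The summed force is used as the
    displacement, capped at `max_step` meters.
    """
    fx = k_att * (goal[0] - pos[0])
    fy = k_att * (goal[1] - pos[1])
    for nx, ny in neighbors:
        dx, dy = pos[0] - nx, pos[1] - ny
        d = math.hypot(dx, dy)
        if 0.0 < d < safe_radius:
            # Negative gradient of 0.5*k_rep*(1/d - 1/safe_radius)**2, pointing away from the neighbor.
            mag = k_rep * (1.0 / d - 1.0 / safe_radius) / (d * d)
            fx += mag * dx / d
            fy += mag * dy / d
    # Cap the displacement so a large force does not cause a huge jump.
    norm = math.hypot(fx, fy)
    if norm > max_step:
        fx, fy = fx * max_step / norm, fy * max_step / norm
    return (pos[0] + fx, pos[1] + fy)


# Example: one node walks from the base to a target 10 m away with no neighbors nearby.
pos = (0.0, 0.0)
for _ in range(20):
    pos = potential_field_step(pos, goal=(10.0, 0.0), neighbors=[])
print(pos)  # (10.0, 0.0)
```

For the return leg, the same step would simply be called with the user's position as `goal`, and passing the other swarm members' positions as `neighbors` keeps the nodes spread apart.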


Last updated: Mar 12, 2014